Networking is an important concept to understand if you want to be comfortable working with Docker. Depending on the use case, a container in your application may need to communicate with other containers on a network, connect to the internet, or stay completely isolated. By the end of this article, you will know the different networking options in Docker and how to use them when you spin up containers.

Docker ships with several built-in network drivers, such as bridge, host, null, overlay, and macvlan, each providing different functionality.

This article focuses on the bridge, host, and null network drivers. Overlay and macvlan will be covered in a separate article.

Get List Of Drivers

To list the networks and their drivers, use the “network ls” command. In the DRIVER column of the output, you will see three drivers (bridge, host, and null), each with a network name and network ID associated with it.

$ docker network ls

Inspecting Network Drivers

You can run the inspect command on each network to print detailed information about it. The output is in JSON format, so if you have jq installed on your machine, you can use it to extract specific fields from the output.
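If jq is not available, the Docker CLI can extract the same fields itself through the “--format” flag, which takes a Go template. The following is a minimal sketch of one way to do that, not a built-in command: the function name print_network_ipam is illustrative, and the DOCKER variable exists only so a stub can stand in for the real CLI during testing. It prints the subnet and gateway of every network, or “-” for networks that have no subnet configured.

```shell
#!/usr/bin/env bash
# Print "<network> <subnet> <gateway>" for every Docker network.
# DOCKER defaults to the real CLI but can be overridden with a stub.
DOCKER="${DOCKER:-docker}"

print_network_ipam() {
  local name ipam
  # "network ls --format" prints one network name per line.
  while IFS= read -r name; do
    # IPAM.Config is a list of {Subnet, Gateway, ...} entries; "range"
    # prints each pair followed by a space. No entries -> empty output.
    ipam="$("$DOCKER" network inspect --format \
      '{{range .IPAM.Config}}{{.Subnet}} {{.Gateway}} {{end}}' "$name" </dev/null)"
    ipam="${ipam% }"   # drop the trailing separator
    # Fall back to "-" when the network has no subnet configured.
    printf '%s %s\n' "$name" "${ipam:--}"
  done < <("$DOCKER" network ls --format '{{.Name}}')
}
```

After sourcing the block, run print_network_ipam on its own; networks such as nixzie will appear in the list once you create them.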

There are two important sections to look for in the inspect output. The first is the “Config” section under “IPAM”, which shows the allocated subnet and gateway IP.

$ docker network inspect bridge | jq '.[0].IPAM.Config'

The second section is “Containers”. If you launch any containers on that network, their details (name, IP address, MAC address, and so on) will appear in this section.

$ docker network inspect bridge | jq '.[0].Containers'

Docker Bridge Network

By default, every container you create is attached to the default bridge network. You might have noticed that a container gets an IP address automatically when it is created. That is because it joins the default bridge network and receives an address from that network's subnet.

Run the inspect command on the default bridge network to get information such as the subnet and gateway IP.

$ docker network inspect bridge | jq '.[0].IPAM.Config'

From the output, you can see that the bridge network has the subnet 172.17.0.0/16 and the gateway address 172.17.0.1. So any container you launch on the default bridge network will be allocated an address from this subnet, such as 172.17.0.2 (172.17.0.1 is already taken by the gateway).
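A /16 prefix means only the first 16 bits (172.17) are fixed, so the block spans 172.17.0.0 to 172.17.255.255. The small pure-bash helper below is a minimal sketch, not something Docker provides: the hypothetical ip_in_subnet function applies the prefix mask so you can check whether a given container address really falls inside the bridge subnet.

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address such as 172.17.0.2 to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed (exit status 0) if IPv4 address $1 lies inside CIDR block $2.
ip_in_subnet() {
  local ip net prefix mask
  ip="$(ip_to_int "$1")"
  net="$(ip_to_int "${2%/*}")"
  prefix="${2#*/}"
  # Keep only the top $prefix bits, e.g. /16 -> 0xFFFF0000.
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}
```

For example, with <container_name> being any container on the default bridge network:

$ ip_in_subnet "$(docker container inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' <container_name>)" 172.17.0.0/16 && echo inside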

Docker uses a software-defined bridge and creates an interface named docker0, which acts as the gateway between the host and the containers. Any traffic sent from the host to a container on this network travels through this gateway.

Open a terminal and run the following command; you will find an interface named docker0. Docker creates this interface by default as part of the bridge network.

$ ip a s

Create User-Defined Bridge Network

You can also create a user-defined bridge network, for which you can choose your own subnet, gateway, and other settings. To create a new bridge network, run the following command. Here I am creating a network named “nixzie”.

# Syntax

$ docker network create <network-name>

$ docker network create nixzie

Run the following command to verify that the network was created.

$ docker network ls

Docker also creates a network interface on the host for every user-defined bridge network. As mentioned earlier, this bridge acts as the gateway for traffic sent from the Docker host to the containers on that particular network.
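Docker names the host interface of a user-defined bridge “br-” followed by the first 12 characters of the network ID, unless a custom name is set through a driver option. The sketch below is illustrative: create_bridge and bridge_iface_name are hypothetical helpers, and DOCKER can be overridden with a stub for testing. It creates a bridge with an explicitly chosen subnet and gateway using the “--subnet” and “--gateway” flags of docker network create, then prints the name of the interface you should find in the “ip a s” output.

```shell
#!/usr/bin/env bash
# DOCKER defaults to the real CLI but can be overridden with a stub.
DOCKER="${DOCKER:-docker}"

# Docker names a user-defined bridge interface "br-" plus the first
# 12 characters of the network ID (unless a custom name is configured).
bridge_iface_name() {
  printf 'br-%s\n' "${1:0:12}"
}

# Create bridge network $1 with subnet $2 and gateway $3, then print
# the name of the host interface that backs it.
create_bridge() {
  local name="$1" subnet="$2" gateway="$3" id
  # On success, "docker network create" prints the new network's full ID.
  id="$("$DOCKER" network create --driver bridge \
    --subnet "$subnet" --gateway "$gateway" "$name")" || return 1
  bridge_iface_name "$id"
}
```

The network name nixzie_custom and the subnet 172.28.0.0/16 below are only examples; pick a subnet that does not overlap an existing network.

$ iface="$(create_bridge nixzie_custom 172.28.0.0/16 172.28.0.1)" && ip addr show "$iface"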

Launch Containers Under Default & User-Defined Bridge Network

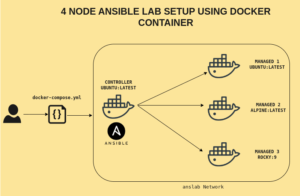

Now that we know how to create a bridge network, let's quickly spin up some containers and see how they communicate with each other.

When creating a container, use the “--network” flag to attach it to a custom network; otherwise it joins the default bridge network. In my case, I created three containers: the first is part of the default bridge network, and the second and third are part of the custom network (nixzie) I created earlier.

$ docker container run -dt --name ubuntu_default_bridge ubuntu

$ docker container run -dt --name ubuntu1_nixzie_bridge --network nixzie ubuntu

$ docker container run -dt --name ubuntu2_nixzie_bridge --network nixzie ubuntu

To open a shell in any running container, run the following command.

$ docker container exec -it <container_name> bash

I am using a custom Ubuntu image that already has all the network utilities installed. If you pull the ubuntu image from Docker Hub instead, you need to install these utilities inside the container.

$ apt update

$ apt install iputils-ping dnsutils net-tools -y

Automatic DNS resolution

There are significant differences between the default bridge network and a user-defined bridge network. A user-defined network provides automatic DNS resolution, which means containers on the same network can reach each other by IP address, container ID, or container name. On the default bridge network, containers can only reach each other by IP address, unless you link them with the legacy “--link” flag.

Connect to either container on the nixzie network and ping the other one using its container name. You can also run the nslookup command to see how the name is resolved.
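The same check can be scripted from the host with docker container exec. This is a minimal sketch: can_reach_by_name is a hypothetical helper, DOCKER can be overridden with a stub for testing, and it assumes ping is installed in the source container (the iputils-ping package listed above). Because the name is resolved inside the container, a successful ping also confirms Docker's DNS resolution.

```shell
#!/usr/bin/env bash
# Check whether container $1 can reach container $2 by its name.
# Assumes ping (iputils-ping) is installed in the source container.
# DOCKER defaults to the real CLI but can be overridden with a stub.
DOCKER="${DOCKER:-docker}"

can_reach_by_name() {
  local from="$1" to="$2"
  # One echo request, waiting at most 2 seconds for a reply. The name is
  # resolved inside the container, so this also exercises Docker's DNS.
  if "$DOCKER" container exec "$from" ping -c 1 -W 2 "$to" >/dev/null 2>&1; then
    echo "$from -> $to: reachable"
  else
    echo "$from -> $to: unreachable"
    return 1
  fi
}
```

For a name lookup alone, getent hosts works too and is part of the ubuntu base image, so it needs no extra package:

$ can_reach_by_name ubuntu1_nixzie_bridge ubuntu2_nixzie_bridge
$ docker container exec ubuntu1_nixzie_bridge getent hosts ubuntu2_nixzie_bridge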

Network Isolation

Containers that are part of the same network can communicate with each other, but they cannot communicate with containers on other networks. Connect to a container on the user-defined network and try to ping the container running on the default bridge network: the ping will fail.
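Since the default bridge container cannot be resolved by name from the nixzie network, this isolation test has to use its IP address. The sketch below is one way to script it: ping_by_ip is a hypothetical helper, DOCKER can be replaced with a stub for testing, and ping is assumed to be installed in the source container. It reads the target's address with docker container inspect and pings it from the source container; with the three containers created above, it is expected to report the default bridge container as unreachable.

```shell
#!/usr/bin/env bash
# Ping container $2 by IP address from container $1.
# Assumes ping (iputils-ping) is installed in the source container.
# DOCKER defaults to the real CLI but can be overridden with a stub.
DOCKER="${DOCKER:-docker}"

ping_by_ip() {
  local from="$1" target="$2" ip
  # The template prints one address per attached network; keep the first.
  read -r ip _ <<<"$("$DOCKER" container inspect --format \
    '{{range .NetworkSettings.Networks}}{{.IPAddress}} {{end}}' "$target")"
  if [[ -z "$ip" ]]; then
    echo "no IP address found for $target" >&2
    return 2
  fi
  # One echo request, waiting at most 2 seconds for a reply.
  if "$DOCKER" container exec "$from" ping -c 1 -W 2 "$ip" >/dev/null 2>&1; then
    echo "$from -> $target ($ip): reachable"
  else
    echo "$from -> $target ($ip): unreachable"
    return 1
  fi
}
```

$ ping_by_ip ubuntu1_nixzie_bridge ubuntu_default_bridge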

Attach & Detach

You can attach running containers to, and detach them from, a user-defined network without stopping them. This is not the case for the default bridge network: to remove a container from it, you have to stop the container and recreate it with different network options.

The following commands attach a running container to, and detach it from, a user-defined bridge network.

$ docker network connect <networkname> <containername>

$ docker network disconnect <networkname> <containername>

Remove User-Defined Bridge Network

To remove a user-defined bridge network, run the following command. Before running it, make sure no active containers are still connected to the network. The bridge interface that was created as part of this network will also be removed.

# Syntax

$ docker network rm <network-name>

$ docker network rm nixzie

You can also run the following command, which removes all user-defined networks on your machine that are not used by any container.

$ docker network prune

Docker Host Network

Host networking is supported on Linux hosts; Docker Desktop for Windows and Mac has historically not supported it. In host network mode, Docker does not give the container an isolated network stack. Instead, the container shares the network stack of the host machine where the Docker daemon is running.
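One way to see this sharing is to compare interface names. /sys/class/net lists a Linux machine's interfaces without needing ifconfig or ip, so the minimal sketch below (container_ifaces is a hypothetical helper, and DOCKER can be overridden with a stub for testing) lists the interfaces visible inside a throwaway ubuntu container attached to a given network. On a Linux host, the list for the host network is expected to match the host's own list, docker0 included.

```shell
#!/usr/bin/env bash
# List the network interfaces visible inside a throwaway ubuntu container
# attached to network $1, sorted so the result can be diffed.
# /sys/class/net exists in any Linux container, so no extra tools are needed.
DOCKER="${DOCKER:-docker}"

container_ifaces() {
  "$DOCKER" container run --rm --network "$1" ubuntu ls /sys/class/net | sort
}
```

$ diff <(ls /sys/class/net | sort) <(container_ifaces host) && echo "same interfaces as the host"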

When using a bridge network, you need to publish (forward) ports from the host to the container, and by default Docker starts a proxy process for each published port. In host networking mode, port publishing does not apply: if you pass port mappings, Docker discards them. This is because the container listens directly on the host's ports, as if it were a process running on the host.

To launch a container on the host network, all you have to do is set the “--network=host” flag.

$ docker container run -dt --name ubuntu_host_network --network=host ubuntu

Docker None Network

The “none” network uses the null driver. When you spin up a container on the none network, it is not attached to any network; only the loopback interface is assigned to it.
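This can also be checked without any extra packages in the image. The hypothetical only_loopback helper below is a minimal sketch: it reads interface names, one per line (for example the contents of /sys/class/net inside the container), and succeeds only if lo is the sole entry.

```shell
#!/usr/bin/env bash
# Succeed only if the interface names on stdin (one per line) consist of
# the loopback interface "lo" and nothing else.
only_loopback() {
  local names
  # Drop blank lines and duplicates before comparing.
  names="$(grep -v '^[[:space:]]*$' | sort -u)"
  [[ "$names" == "lo" ]]
}
```

$ docker container run --rm --network none ubuntu ls /sys/class/net | only_loopback && echo "loopback only"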

$ docker network ls -f driver=null

NETWORK ID     NAME      DRIVER    SCOPE
e677567e4352   none      null      local

Let’s quickly spin up an Ubuntu container for demonstration.

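$ docker container run -dt --name ubuntu_none_network --network none ubuntu

The container is up and running. Now connect to the container and run the ifconfig command. You will not see any interface other than the loopback interface (lo). Because this container has no network access, you cannot apt install net-tools inside it, so ifconfig is only available if the image already includes that package.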

$ docker exec -it ubuntu_none_network bash

$ ifconfig

Conclusion

In this article, we looked at the Docker network stack, and specifically at how to work with the bridge, host, and none networks. Macvlan and overlay networks are not covered here; they will be covered in a separate article.